Symmetries in Neural Networks

There was a phenomenal discussion on Twitter sparked by the following tweet. I was left with many more questions than answers, but I think this is a good thing. Below, I summarize the ideas that were shared.

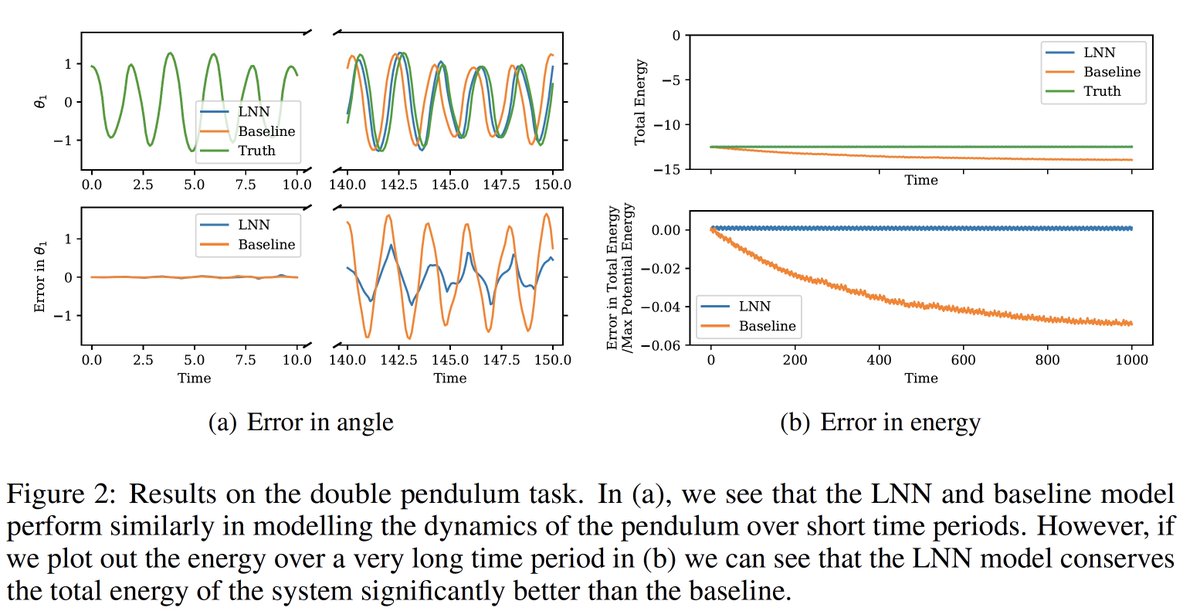

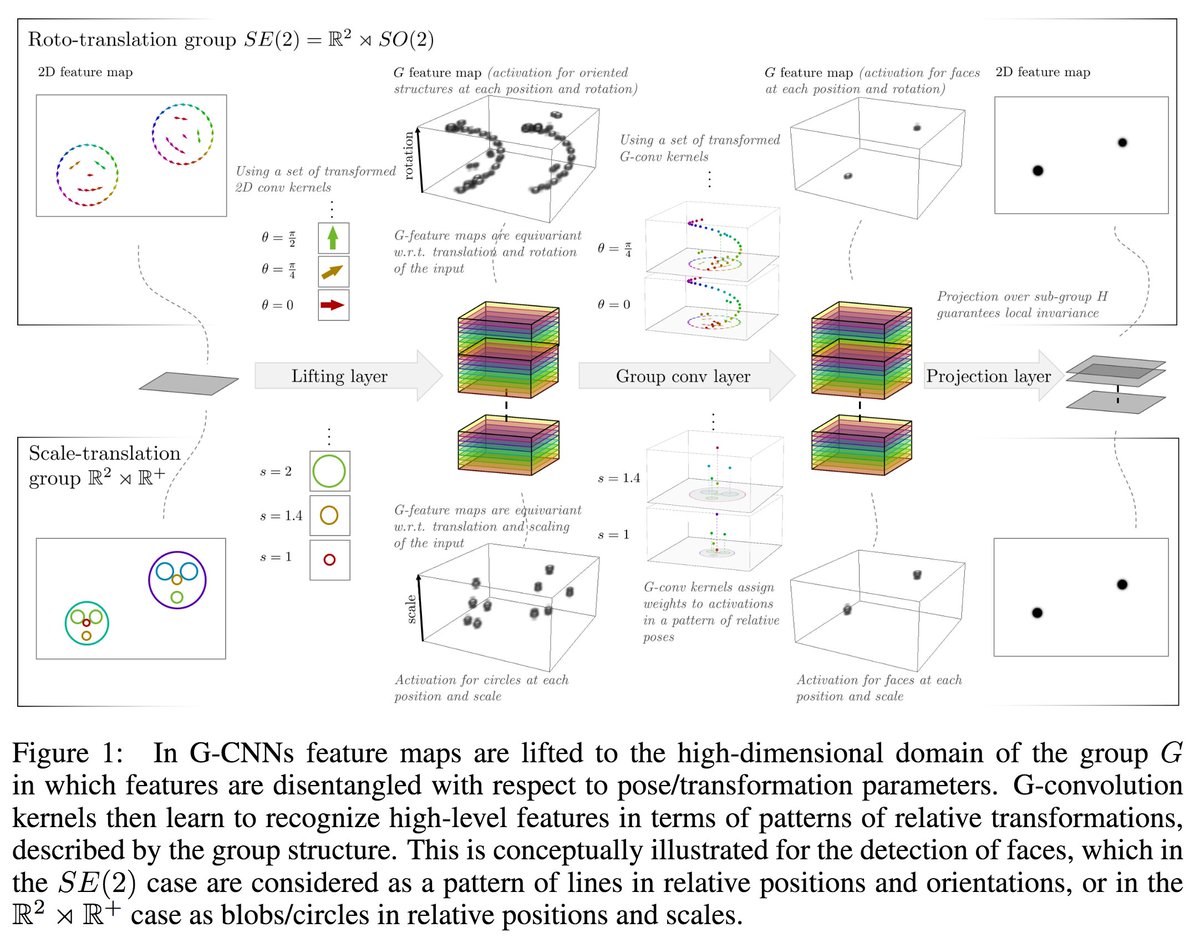

So 1) Lagrangian/Hamiltonian NNs enforce time symmetry, 2) Graph Nets enforce translational symmetry, and 3) Group-CNNs enforce rotational symmetry.

But are there any NNs that can enforce an arbitrary learned symmetry? @wellingmax @DaniloJRezende @KyleCranmer?

— Miles Cranmer (@MilesCranmer) April 17, 2020

Here’s a list of some of the interesting papers mentioned.

First, the papers referenced in the main tweet:

- LNNs - arxiv.org/abs/2003.04630

- HNNs - arxiv.org/abs/1906.01563

- Graph Nets - arxiv.org/abs/1806.01261 (+ refs therein…)

- Group-CNNs - http://proceedings.mlr.press/v48/cohenc16.pdf

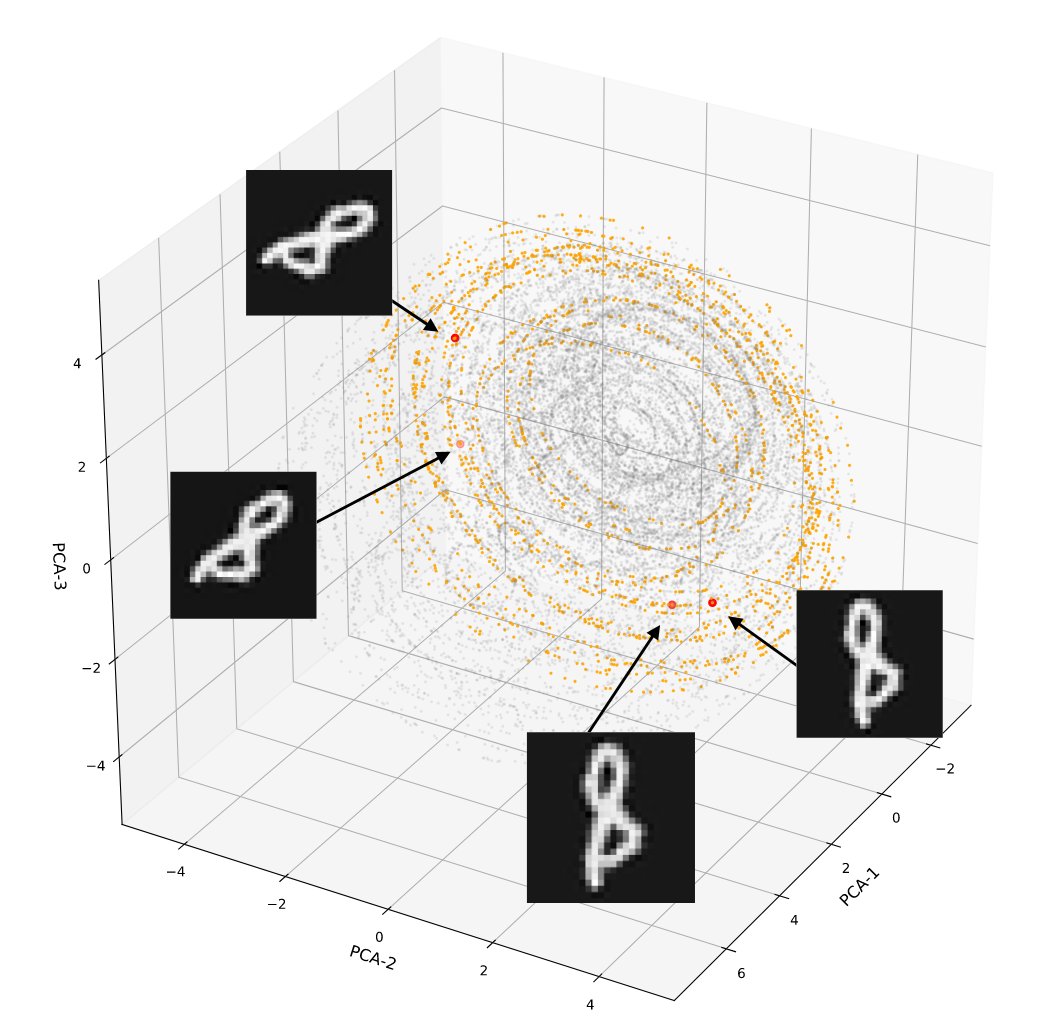

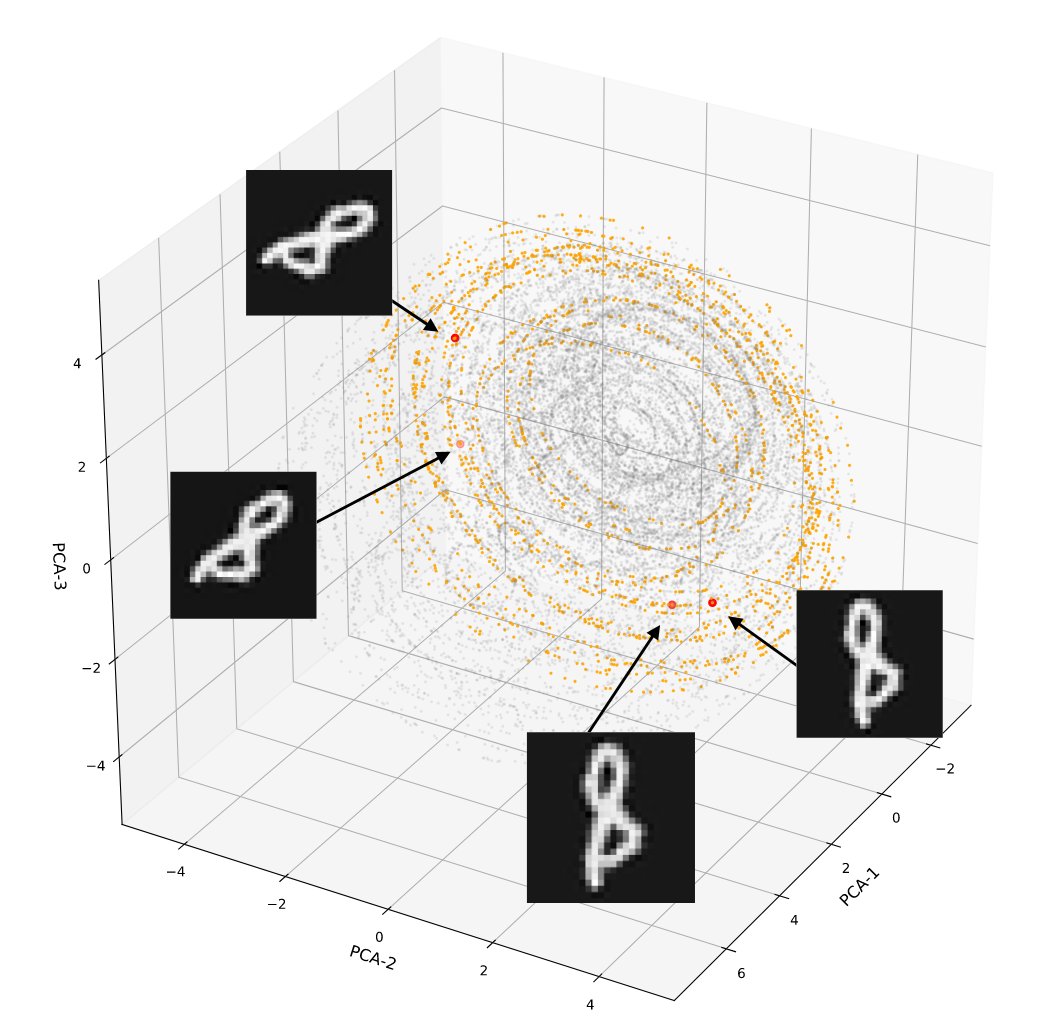
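To make the idea of a built-in symmetry concrete, here is a minimal sketch of the translational case from the main tweet. It is a toy illustration, not code from any of the papers above: the function names and the particular pairwise formula are assumptions chosen for the example. The point is that a readout which only ever sees relative positions cannot change when every node is shifted by the same amount.

```python
def pairwise_message_sum(positions):
    """Toy graph-style readout: sum a function of relative positions
    over all ordered pairs of distinct nodes (hypothetical example)."""
    total = 0.0
    for i, (xi, yi) in enumerate(positions):
        for j, (xj, yj) in enumerate(positions):
            if i != j:
                # Only differences enter, so a global shift cancels out.
                dx, dy = xi - xj, yi - yj
                total += 1.0 / (1.0 + dx * dx + dy * dy)
    return total


def translate(positions, shift):
    """Shift every node by the same 2D offset."""
    sx, sy = shift
    return [(x + sx, y + sy) for x, y in positions]


nodes = [(0.0, 0.0), (1.0, 2.0), (-1.5, 0.5)]
original = pairwise_message_sum(nodes)
shifted = pairwise_message_sum(translate(nodes, (3.5, -2.0)))
# The two values agree up to floating-point rounding.
```

Here the symmetry is enforced by construction (the architecture never sees absolute coordinates), which is the sense of "enforce" used in the tweet.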

Now, some papers mentioned in the thread:

@KrippendorfSven 2020 - identifies symmetries by considering generating operators in a compressed latent space

@erikjbekkers 2019 (images/grids) & @m_finzi - arxiv.org/abs/2002.12880 (alternative technique; extends to point clouds and Hamiltonian GNs) - enforce symmetry in CNNs over a variety of Lie groups (for example, 2D rotational symmetry corresponds to the “SO(2) Lie group”)

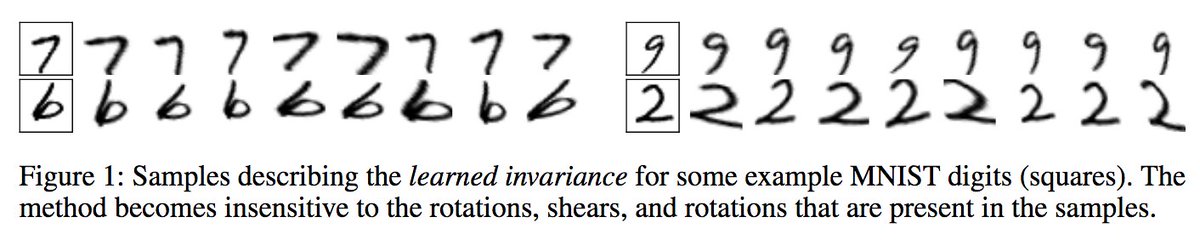
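As a small aside on what “SO(2) Lie group” means in practice, the sketch below builds a 2D rotation from its generator by exponentiating it. This is a standard textbook fact rather than anything specific to the papers above, and the helper names (`matmul`, `expm_2x2`, `rotation_from_generator`) are made up for this example. The matrix exponential is approximated with a truncated Taylor series to keep the code dependency-free.

```python
import math


def matmul(a, b):
    """Multiply two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def expm_2x2(m, terms=30):
    """Approximate exp(m) for a 2x2 matrix with a truncated Taylor series."""
    result = [[1.0, 0.0], [0.0, 1.0]]
    term = [[1.0, 0.0], [0.0, 1.0]]
    for n in range(1, terms):
        # term becomes m^n / n! by multiplying the previous term by m / n.
        term = [[v / n for v in row] for row in matmul(term, m)]
        result = [[result[i][j] + term[i][j] for j in range(2)]
                  for i in range(2)]
    return result


# Generator of 2D rotations (the single basis element of the Lie algebra so(2)).
GENERATOR = [[0.0, -1.0], [1.0, 0.0]]


def rotation_from_generator(theta):
    """Return exp(theta * G), which should match the usual rotation matrix."""
    return expm_2x2([[theta * v for v in row] for row in GENERATOR])


R = rotation_from_generator(0.7)
expected = [[math.cos(0.7), -math.sin(0.7)],
            [math.sin(0.7), math.cos(0.7)]]
# R and expected agree to within floating-point error.
```

The appeal of working with generators is that a single matrix describes the whole continuous family of transformations, which is what makes "learning a symmetry" a finite-dimensional problem.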

@markvanderwilk 2018 - moves the symmetry enforcement from the architecture to the loss function

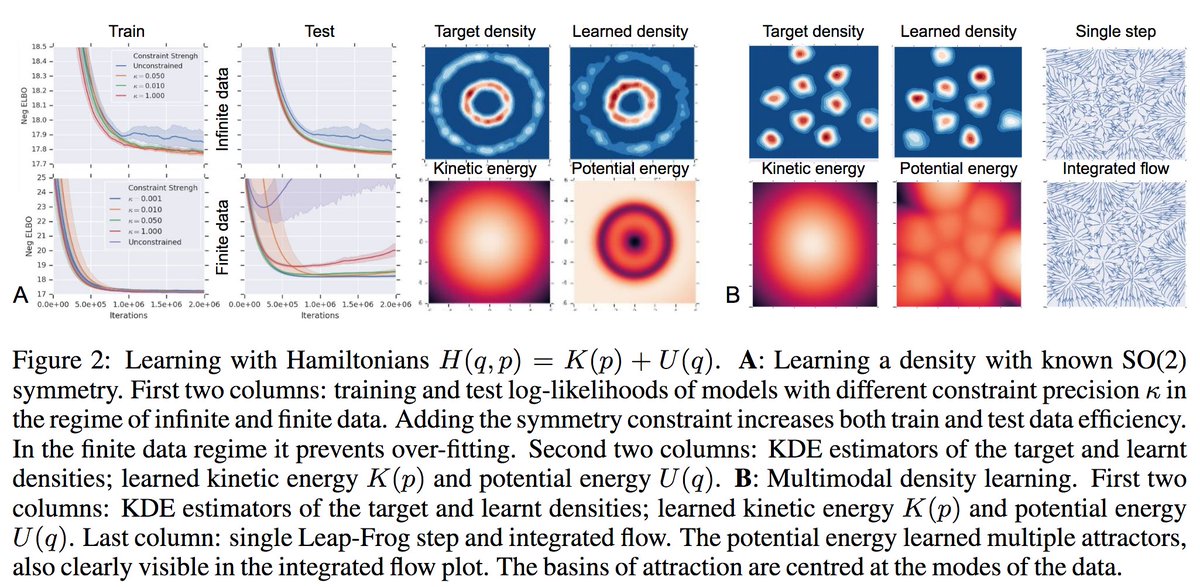
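To illustrate the general idea of putting a symmetry into the objective instead of the architecture, here is a generic sketch of a rotation-invariance penalty added to a data-fitting loss. This is not the method of the paper cited above; it is just one simple way such a term could look, and the names `invariance_penalty`, `total_loss`, and `weight` are assumptions made for this example.

```python
import math


def rotate(point, theta):
    """Rotate a 2D point counter-clockwise by theta radians."""
    x, y = point
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * y, s * x + c * y)


def invariance_penalty(model, points, thetas):
    """Mean squared change in the model output under the sampled rotations."""
    total, count = 0.0, 0
    for p in points:
        for t in thetas:
            diff = model(rotate(p, t)) - model(p)
            total += diff * diff
            count += 1
    return total / count


def total_loss(model, points, targets, thetas, weight=1.0):
    """Mean squared error on the data plus a weighted invariance penalty."""
    data_term = sum((model(p) - y) ** 2
                    for p, y in zip(points, targets)) / len(points)
    return data_term + weight * invariance_penalty(model, points, thetas)


points = [(1.0, 0.0), (0.5, 2.0)]
thetas = [0.3, 1.2]


def radial(p):
    """Depends only on the radius, so it is rotation invariant."""
    return p[0] ** 2 + p[1] ** 2


def first_coordinate(p):
    """Depends on direction, so it is not rotation invariant."""
    return p[0]


penalty_radial = invariance_penalty(radial, points, thetas)
penalty_first = invariance_penalty(first_coordinate, points, thetas)
# penalty_radial is essentially zero; penalty_first is clearly positive.
```

Unlike an equivariant architecture, a penalty like this only encourages the symmetry, so the trained model may still violate it where the data term pulls the other way.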

@DaniloJRezende 2019 - demonstrates how to impose specific Lie group symmetries on densities in a Hamiltonian flow model

Kyle Cranmer:

Excited to share our newest paper combining machine learning and physics. We develop normalizing flows that respect symmetry to dramatically speed up "lattice techniques", which is a powerful computational approach to studying fundamental physics. https://t.co/6HTITx9l7F pic.twitter.com/ek8q7nqMx8

— Kyle Cranmer (@KyleCranmer) March 16, 2020

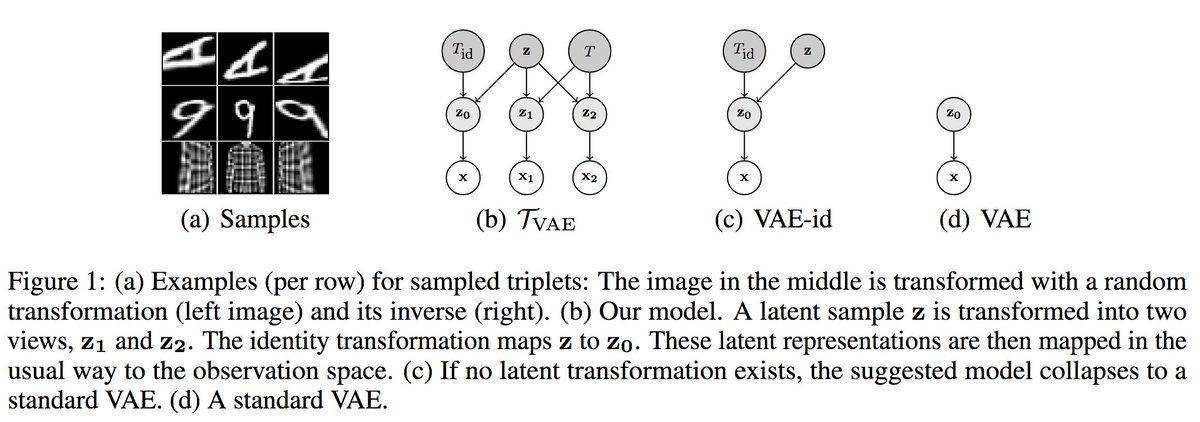

@plastiq 2019 - extends the VAE to learn symmetries by simultaneously learning a transformation between different latent states or “views”

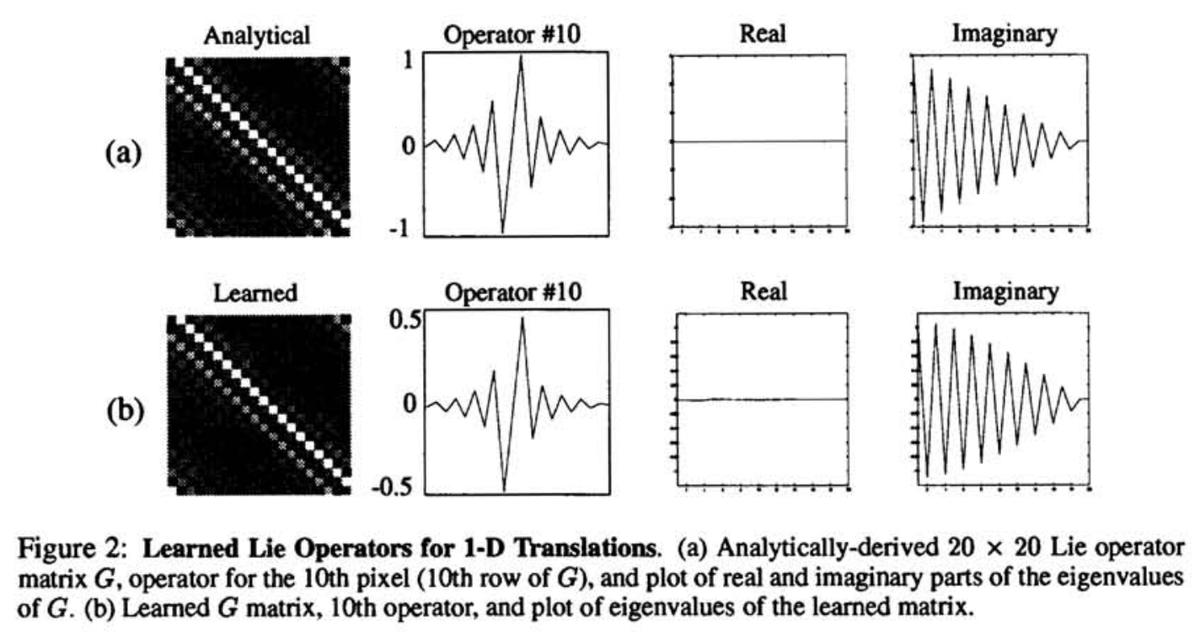

Rao & Ruderman (1999!) - demonstrate how to learn a Lie group operator (matrix) from data.
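To give a feel for what "learning a Lie group operator from data" can mean, here is a deliberately simplified sketch. It is not Rao & Ruderman's algorithm: it assumes 2D points, a known small transformation angle `theta`, and the first-order approximation y - x ≈ theta · G x, then fits the generator matrix G by ordinary least squares. All names are hypothetical.

```python
import math


def rotate(point, theta):
    """Rotate a 2D point counter-clockwise by theta radians."""
    x, y = point
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * y, s * x + c * y)


def estimate_generator(inputs, outputs, theta):
    """Least-squares fit of G in (y - x) / theta ~= G x for 2D points."""
    # Accumulate D X^T and X X^T, where D holds the scaled differences.
    dxt = [[0.0, 0.0], [0.0, 0.0]]
    xxt = [[0.0, 0.0], [0.0, 0.0]]
    for x, y in zip(inputs, outputs):
        d = [(y[0] - x[0]) / theta, (y[1] - x[1]) / theta]
        for i in range(2):
            for j in range(2):
                dxt[i][j] += d[i] * x[j]
                xxt[i][j] += x[i] * x[j]
    # Invert the 2x2 matrix X X^T in closed form.
    det = xxt[0][0] * xxt[1][1] - xxt[0][1] * xxt[1][0]
    inv = [[xxt[1][1] / det, -xxt[0][1] / det],
           [-xxt[1][0] / det, xxt[0][0] / det]]
    # G = (D X^T) (X X^T)^{-1}
    return [[sum(dxt[i][k] * inv[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


# Synthetic "before/after" pairs produced by a small rotation.
points = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (2.0, -1.0)]
theta = 1e-3
rotated = [rotate(p, theta) for p in points]

G = estimate_generator(points, rotated, theta)
# G comes out close to the rotation generator [[0, -1], [1, 0]],
# with a small error that shrinks as theta gets smaller.
```

The first-order approximation is only reasonable for small transformations, and in a realistic setting the transformation amount would itself be unknown and have to be inferred alongside G.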

Anselmi et al. 2017 - describe some weight regularizations that guide the NN toward learning a symmetry rather than enforcing it