How to extract knowledge from graph networks

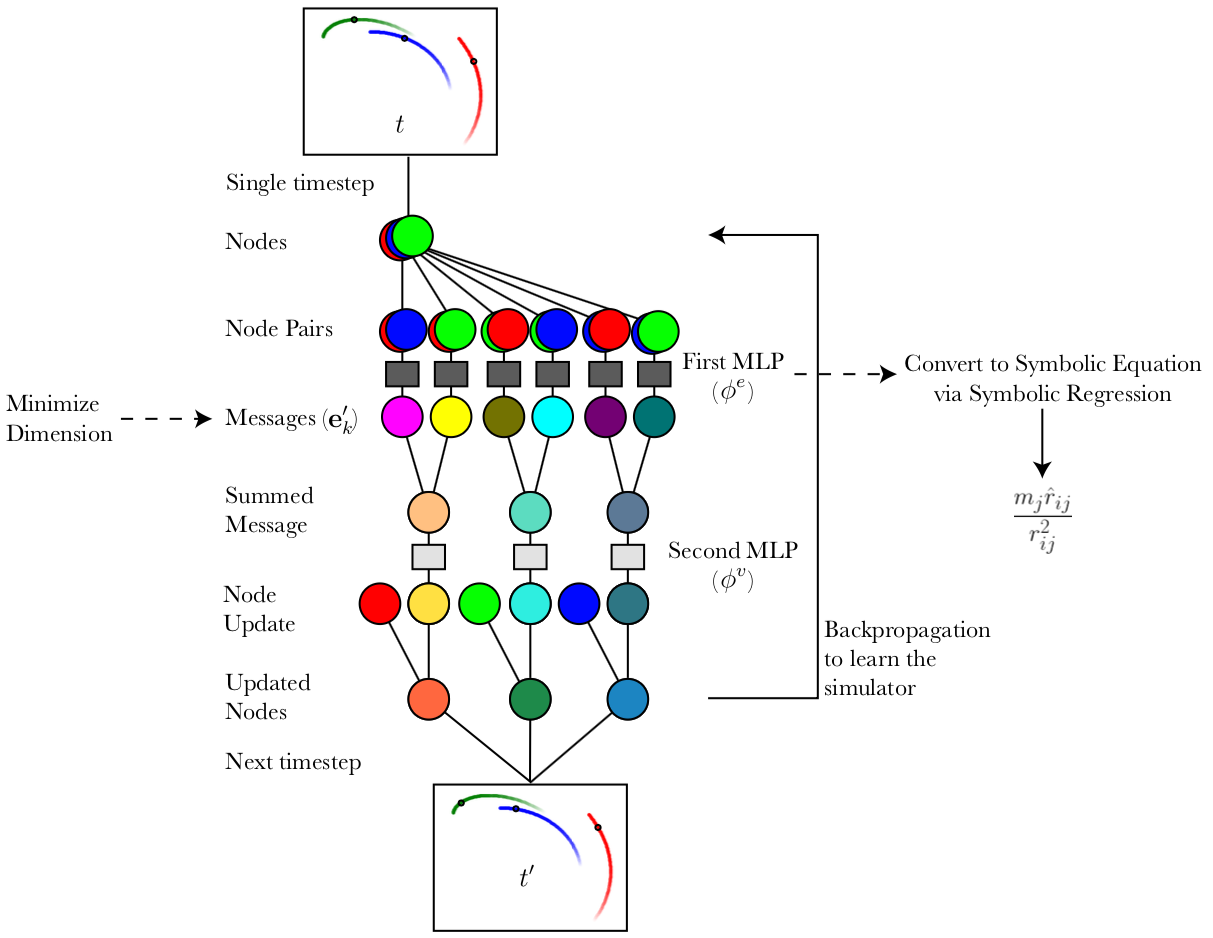

Learning Symbolic Physics with Graph Networks

We introduce an approach for imposing physically motivated inductive biases on graph networks to learn interpretable representations and improved zero-shot generalization. Our experiments show that our graph network models, which implement this inductive bias, can learn message representations equivalent to the true force vector when trained on n-body gravitational and spring-like simulations. We use symbolic regression to fit explicit algebraic equations to our trained model’s message function and recover the symbolic form of Newton’s law of gravitation without prior knowledge. We also show that our model generalizes better at inference time to systems with more bodies than had been experienced during training. Our approach is extensible, in principle, to any unknown interaction law learned by a graph network, and offers a valuable technique for interpreting and inferring explicit causal theories about the world from implicit knowledge captured by deep learning.
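The symbolic-regression step described above can be illustrated with a toy sketch. This is not the authors' pipeline. It assumes the learned message has already been reduced to a scalar magnitude, stands in for the trained message function with synthetic data generated from an inverse-square law, and replaces a real symbolic regression search with a small hand-written list of candidate expressions. The names `CANDIDATES`, `make_samples`, `fit_candidate`, and `best_expression`, and the constant `G=2.5`, are all hypothetical.

```python
import random

# Hypothetical candidate library: each name maps to a feature g(m1, m2, r).
# A real symbolic regression tool would search expression space instead.
CANDIDATES = {
    "m1*m2/r": lambda m1, m2, r: m1 * m2 / r,
    "m1*m2/r**2": lambda m1, m2, r: m1 * m2 / r**2,
    "m1*m2/r**3": lambda m1, m2, r: m1 * m2 / r**3,
    "(m1+m2)/r**2": lambda m1, m2, r: (m1 + m2) / r**2,
    "m1*m2*r": lambda m1, m2, r: m1 * m2 * r,
}


def make_samples(n=200, G=2.5, seed=0):
    """Synthetic stand-in for (edge inputs, learned message magnitude) pairs.

    Assumption: the messages follow an inverse-square law exactly. In practice
    they would be read out of a trained graph network's message function.
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        m1 = rng.uniform(0.5, 5.0)
        m2 = rng.uniform(0.5, 5.0)
        r = rng.uniform(0.5, 3.0)
        samples.append((m1, m2, r, G * m1 * m2 / r**2))
    return samples


def fit_candidate(feature, samples):
    """Fit message ~ c * feature by one-parameter least squares; return (c, mse)."""
    g = [feature(m1, m2, r) for m1, m2, r, _ in samples]
    y = [message for _, _, _, message in samples]
    c = sum(gi * yi for gi, yi in zip(g, y)) / sum(gi * gi for gi in g)
    mse = sum((c * gi - yi) ** 2 for gi, yi in zip(g, y)) / len(y)
    return c, mse


def best_expression(samples):
    """Return (name, coefficient, mse) of the candidate with the lowest error."""
    results = {name: fit_candidate(f, samples) for name, f in CANDIDATES.items()}
    name = min(results, key=lambda k: results[k][1])
    c, mse = results[name]
    return name, c, mse


if __name__ == "__main__":
    name, c, mse = best_expression(make_samples())
    print(f"best form: {c:.3f} * {name}  (mse={mse:.2e})")
```

On this synthetic data the search selects `m1*m2/r**2` with a coefficient close to the generating constant, since every other candidate leaves a nonzero residual. With messages taken from a real trained model, the fit would be noisier and the candidate set would need to be far richer.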